User talk:Kghbln

→ page for storing reference data

Elastic replication?

Just checked Exclude_page_example to see the query and I can see a couple of recent changes aren't in Elastic because Special:SemanticMediaWiki/elastic shows "Last active replication: 2019-02-02 03:14:38".

Any errors?

- I will have a look. However, yesterday I upgraded Elasticsearch, which was a minor rather than a patch update, so there may be a connection. Sit tight. --[[kgh]] (discussion) 2 February 2019 at 10:38 (CET)

- I again ran into an issue due to exceeding the disk watermark:

Elasticsearch\Common\Exceptions\Forbidden403Exception from line 605 of /../w/vendor/elasticsearch/elasticsearch/src/Elasticsearch/Connections/Connection.php: {"error":{"root_cause":[{"type":"cluster_block_exception","reason":"blocked by: [FORBIDDEN/12/index read-only / allow delete (api)];"}],"type":"cluster_block_exception","reason":"blocked by: [FORBIDDEN/12/index read-only / allow delete (api)];"},"status":403}

30 GB of free disk space is just not enough for Elasticsearch. At the same time the server was under attack, so it took longer to fix by running:

curl -XPUT -H "Content-Type: application/json" http://localhost:9200/_all/_settings -d '{"index.blocks.read_only_allow_delete": null}'

- Should be resolved now. --[[kgh]] (discussion) 2 February 2019 at 13:37 (CET)
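The curl call above can also be scripted. A minimal sketch using only the Python standard library, assuming Elasticsearch listens on http://localhost:9200 as in the command above. The function name `build_unblock_request` is hypothetical, and the request is only built here, not sent:

```python
import json
import urllib.request


def build_unblock_request(base_url="http://localhost:9200"):
    """Build the PUT request that clears the read-only block on all indices."""
    # A null value resets index.blocks.read_only_allow_delete.
    body = json.dumps({"index.blocks.read_only_allow_delete": None}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/_all/_settings",
        data=body,
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


if __name__ == "__main__":
    req = build_unblock_request()
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it: urllib.request.urlopen(req)
```

Building the request separately from sending it makes it easy to inspect what would go over the wire before touching a cluster that is already in trouble.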

So again everything got out of control. Setting the following is nonsense:

cluster.routing.allocation.disk.watermark.flood_stage: 99%

cluster.routing.allocation.disk.watermark.low: 95%

cluster.routing.allocation.disk.watermark.high: 97%

I have a disk usage of 91% and Elasticsearch spams the error log with high and low disk warnings. Now I have set absolute values in GB:

cluster.routing.allocation.disk.watermark.flood_stage: 5gb

cluster.routing.allocation.disk.watermark.low: 20gb

cluster.routing.allocation.disk.watermark.high: 10gb

Let's see what happens. If this fails I am afraid I will not be able to serve SMW with Elasticsearch on this server. :( --[[kgh]] (discussion) 3 February 2019 at 21:48 (CET)
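For reference, Elasticsearch reads the two notations differently: a percentage watermark is a threshold on used disk space, while an absolute value such as `20gb` is a threshold on free disk space. A rough sketch of that comparison, with a hypothetical helper `watermark_stage` and hypothetical disk sizes. It only illustrates the arithmetic and is not how Elasticsearch implements the check:

```python
def watermark_stage(total_gb, free_gb, low, high, flood):
    """Return which watermark (if any) a disk has crossed.

    Thresholds are either percentages of used space ("95%") or
    absolute amounts of free space in GB ("20gb").
    """
    used_pct = 100.0 * (total_gb - free_gb) / total_gb

    def crossed(threshold):
        threshold = threshold.strip().lower()
        if threshold.endswith("%"):
            # Percentage: triggered when used space exceeds the value.
            return used_pct > float(threshold[:-1])
        # Absolute: triggered when free space drops below the value.
        return free_gb < float(threshold[:-2])

    # Check the most severe stage first.
    for name, threshold in (("flood_stage", flood), ("high", high), ("low", low)):
        if crossed(threshold):
            return name
    return "ok"


if __name__ == "__main__":
    # Hypothetical 300 GB disk with 27 GB free, i.e. 91% used.
    print(watermark_stage(300, 27, "95%", "97%", "99%"))
    print(watermark_stage(300, 27, "20gb", "10gb", "5gb"))
```

Under this reading, a disk that is 91% full sits below all three percentage thresholds quoted above, so warnings at that level would suggest the values were not being applied as written.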

- It is still failing, however for another reason:

[2019-02-04T05:46:37,800][DEBUG][o.e.a.s.TransportSearchAction] [Ql8wRCh] All shards failed for phase: [query]

org.elasticsearch.index.query.QueryShardException: failed to create query: {

"constant_score" : {

"filter" : {

"bool" : {

"must" : [

{

"range" : {

"P:10.wpgField" : {

"from" : "O:35:\"SMW\\DataModel\\ContainerSemanticData\":10:{s:11:\"\u0000*\u0000mSubject\";O:14:\"SMW\\DIWikiPage\":9:{s:10:\"\u0000*\u0000m_dbkey\";s:17:\"SMWInternalObject\";s:14:\"\u0000*\u0000m_namespace\";i:-1;s:14:\"\u0000*\u0000m_interwiki\";s:0:\"\";s:18:\"\u0000*\u0000m_subobjectname\";s:3:\"int\";s:23:\"\u0000SMW\\DIWikiPage\u0000sortkey\";N;s:32:\"\u0000SMW\\DIWikiPage\u0000contextReference\";N;s:28:\"\u0000SMW\\DIWikiPage\u0000pageLanguage\";N;s:18:\"\u0000SMW\\DIWikiPage\u0000id\";i:0;s:20:\"\u0000SMWDataItem\u0000options\";N;}s:14:\"\u0000*\u0000mProperties\";a:2:{s:6:\"_LCODE\";O:14:\"SMW\\DIProperty\":5:{s:21:\"\u0000SMW\\DIProperty\u0000m_key\";s:6:\"_LCODE\";s:25:\"\u0000SMW\\DIProperty\u0000m_inverse\";b:0;s:33:\"\u0000SMW\\DIProperty\u0000propertyValueType\";s:7:\"__lcode\";s:25:\"\u0000SMW\\DIProperty\u0000interwiki\";s:0:\"\";s:20:\"\u0000SMWDataItem\u0000options\";N;}s:5:\"_TEXT\";O:14:\"SMW\\DIProperty\":5:{s:21:\"\u0000SMW\\DIProperty\u0000m_key\";s:5:\"_TEXT\";s:25:\"\u0000SMW\\DIProperty\u0000m_inverse\";b:0;s:33:\"\u0000SMW\\DIProperty\u0000propertyValueType\";s:4:\"_txt\";s:25:\"\u0000SMW\\DIProperty\u0000interwiki\";s:0:\"\";s:20:\"\u0000SMWDataItem\u0000options\";N;}}s:12:\"\u0000*\u0000mPropVals\";a:2:{s:5:\"_TEXT\";a:1:{s:120:\"Used in cases where a URL property needs to be exported as an \"owl:AnnotationProperty\". It is a variant of datatype URL.\";O:9:\"SMWDIBlob\":2:{s:11:\"\u0000*\u0000m_string\";s:120:\"Used in cases where a URL property needs to be exported as an \"owl:AnnotationProperty\". 
It is a variant of datatype URL.\";s:20:\"\u0000SMWDataItem\u0000options\";N;}}s:6:\"_LCODE\";a:1:{s:2:\"en\";O:9:\"SMWDIBlob\":2:{s:11:\"\u0000*\u0000m_string\";s:2:\"en\";s:20:\"\u0000SMWDataItem\u0000options\";N;}}}s:19:\"\u0000*\u0000mHasVisibleProps\";b:1;s:19:\"\u0000*\u0000mHasVisibleSpecs\";b:1;s:16:\"\u0000*\u0000mNoDuplicates\";b:1;s:55:\"\u0000SMW\\DataModel\\ContainerSemanticData\u0000skipAnonymousCheck\";b:1;s:18:\"\u0000*\u0000subSemanticData\";O:29:\"SMW\\DataModel\\SubSemanticData\":2:{s:38:\"\u0000SMW\\DataModel\\SubSemanticData\u0000subject\";r:2;s:46:\"\u0000SMW\\DataModel\\SubSemanticData\u0000subSemanticData\";a:0:{}}s:10:\"\u0000*\u0000options\";N;s:16:\"\u0000*\u0000extensionData\";a:1:{s:9:\"sort.data\";s:123:\"Used in cases where a URL property needs to be exported as an \"owl:AnnotationProperty\". It is a variant of datatype URL.;en\";}}",

"to" : null,

"include_lower" : false,

"include_upper" : true,

"boost" : 1.0

}

}

}

],

"adjust_pure_negative" : true,

"boost" : 1.0

}

},

"boost" : 1.0

}

}

at org.elasticsearch.index.query.QueryShardContext.toQuery(QueryShardContext.java:324) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.index.query.QueryShardContext.toQuery(QueryShardContext.java:307) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.search.SearchService.parseSource(SearchService.java:806) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.search.SearchService.createContext(SearchService.java:656) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.search.SearchService.createAndPutContext(SearchService.java:631) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.search.SearchService.executeQueryPhase(SearchService.java:388) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.search.SearchService.access$100(SearchService.java:126) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.search.SearchService$2.onResponse(SearchService.java:360) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.search.SearchService$2.onResponse(SearchService.java:356) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.search.SearchService$4.doRun(SearchService.java:1117) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:759) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.common.util.concurrent.TimedRunnable.doRun(TimedRunnable.java:41) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-6.6.0.jar:6.6.0]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_181]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_181]

Caused by: java.lang.IllegalArgumentException: input automaton is too large: 1001

at org.apache.lucene.util.automaton.Operations.isFinite(Operations.java:1037) ~[lucene-core-7.6.0.jar:7.6.0 719cde97f84640faa1e3525690d262946571245f - nknize - 2018-12-07 14:44:20]

...

So the reason is "Caused by: java.lang.IllegalArgumentException: input automaton is too large: 1001", which I take to mean that Elasticsearch tries to do more than 1000 write operations a second, which in turn is a limit that the ISP imposed on Saturday because the wiki was causing 50% of the total load at their end. I will now have to find out how to restrict write operations per second. I am wondering why software tries to do this in the first place. --[[kgh]] (discussion) 4 February 2019 at 10:03 (CET)

This is going to be fun, e.g.

- https://github.com/projectblacklight/blacklight/issues/1972

- http://lucene.472066.n3.nabble.com/solr-5-2-gt-7-2-suggester-failure-td4383551.html

From quickly reading through the issues I think that the field "wpgField" is too long, thus causing loops. --[[kgh]] (discussion)
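If the over-long value is indeed the trigger, the log fits that reading: the `from` bound of the range query is an entire serialized `ContainerSemanticData` object, far longer than the 1000 hinted at in the exception. A minimal sketch of a length guard, assuming the limit is 1000 and applies to the byte length of the term. Both the helper names and that interpretation of the limit are assumptions, not something confirmed by the linked issues:

```python
AUTOMATON_LIMIT = 1000  # assumed from "input automaton is too large: 1001"


def is_term_too_long(term, limit=AUTOMATON_LIMIT):
    """Return True if the UTF-8 encoded term is longer than the limit."""
    return len(term.encode("utf-8")) > limit


def safe_range_bound(term, limit=AUTOMATON_LIMIT):
    """Return the term unchanged, or None when it should not be used as a range bound."""
    return None if is_term_too_long(term, limit) else term


if __name__ == "__main__":
    print(safe_range_bound("Project"))
    print(safe_range_bound("x" * 5000))
```

A guard like this would only hide the symptom. Why a serialized object ends up as a range bound in the first place remains the open question.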

We have two issues here. Once the disk space issue is resolved (it continues to appear even with settings which should prevent this, another story though), the second issue is spamming the Elasticsearch error log and at the same time preventing replication. Is there something SMW can do? --[[kgh]] (discussion) 9 February 2019 at 10:16 (CET)

- First of all, the query above that causes "All shards failed for phase: [query] org.elasticsearch.index.query.QueryShardException: failed to create query" shouldn't be there at all because it is a query on a change propagation for the `P:10.wpgField` property (which is `P` for property, 10 for the ID, which is a fixed ID for `_PDESC`, and `wpgField` for the specific field that is queried). We should find out where this query is sent from.
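The naming scheme described above can be taken apart mechanically when scanning logs for suspicious queries. A small sketch, assuming field names always follow the `P:<ID>.<field>` shape seen in the log; the helper `parse_field` and the pattern are illustrative and not part of SMW:

```python
import re

# "P" for property, a numeric property ID, then the queried field.
FIELD_PATTERN = re.compile(r"^P:(\d+)\.(\w+)$")


def parse_field(name):
    """Split a field name such as "P:10.wpgField" into (property ID, field)."""
    match = FIELD_PATTERN.match(name)
    if match is None:
        raise ValueError(f"not a property field: {name!r}")
    return int(match.group(1)), match.group(2)


if __name__ == "__main__":
    print(parse_field("P:10.wpgField"))
```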

- Just pulled in f8df5ed. Thanks a lot for the effort you put into this. I will have to leave now so tomorrow I will have a look and report more findings.

- The transgressing content:

[2019-02-10T10:15:24,853][DEBUG][o.e.a.b.TransportShardBulkAction] [Ql8wRCh] [smw-data-0210020150926-02100_-v1][1] failed to execute bulk item (index) index {[smw-data-0210020150926-02100_-v1][data][20782], source[{"subject":{"title":"Project","subobject":"","namespace":0,"interwiki":"","sortkey":"Project"},"P:20783":{"wpgField":["Project"],"wpgID":[20782]},"P:30":{"datField":[2458078.425463],"dat_raw":["1\/2017\/11\/20\/22\/12\/40\/0"]},"P:32":{"wpgField":["Kghbln"],"wpgID":[32941]},"P:29":{"datField":[2458078.425463],"dat_raw":["1\/2017\/11\/20\/22\/12\/40\/0"]},"P:31":{"booField":[false]},"P:32948":{"wpgField":["Kghbln"],"wpgID":[32941]},"P:32947":{"wpgField":["Kghbln"],"wpgID":[32941]},"P:32949":{"numField":[1.0]},"P:32946":{"numField":[2796.0]},"P:14306":{"numField":[20.0]},"P:32945":{"numField":[8073.0]},"P:14307":{"numField":[19.0]}}]}

org.elasticsearch.ElasticsearchTimeoutException: Failed to acknowledge mapping update within [30s]

at org.elasticsearch.cluster.action.index.MappingUpdatedAction.updateMappingOnMaster(MappingUpdatedAction.java:88) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.cluster.action.index.MappingUpdatedAction.updateMappingOnMaster(MappingUpdatedAction.java:78) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.bulk.TransportShardBulkAction$ConcreteMappingUpdatePerformer.updateMappings(TransportShardBulkAction.java:520) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.bulk.TransportShardBulkAction.lambda$executeIndexRequestOnPrimary$5(TransportShardBulkAction.java:467) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.bulk.TransportShardBulkAction.executeOnPrimaryWhileHandlingMappingUpdates(TransportShardBulkAction.java:490) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.bulk.TransportShardBulkAction.executeIndexRequestOnPrimary(TransportShardBulkAction.java:461) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.bulk.TransportShardBulkAction.executeBulkItemRequest(TransportShardBulkAction.java:216) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.bulk.TransportShardBulkAction.performOnPrimary(TransportShardBulkAction.java:159) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.bulk.TransportShardBulkAction.performOnPrimary(TransportShardBulkAction.java:151) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.bulk.TransportShardBulkAction.shardOperationOnPrimary(TransportShardBulkAction.java:139) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.bulk.TransportShardBulkAction.shardOperationOnPrimary(TransportShardBulkAction.java:79) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.support.replication.TransportReplicationAction$PrimaryShardReference.perform(TransportReplicationAction.java:1051) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.support.replication.TransportReplicationAction$PrimaryShardReference.perform(TransportReplicationAction.java:1029) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.support.replication.ReplicationOperation.execute(ReplicationOperation.java:102) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.support.replication.TransportReplicationAction$AsyncPrimaryAction.runWithPrimaryShardReference(TransportReplicationAction.java:425) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.support.replication.TransportReplicationAction$AsyncPrimaryAction.lambda$doRun$0(TransportReplicationAction.java:371) ~[elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.ActionListener$1.onResponse(ActionListener.java:60) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.index.shard.IndexShardOperationPermits.acquire(IndexShardOperationPermits.java:273) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.index.shard.IndexShardOperationPermits.acquire(IndexShardOperationPermits.java:240) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.index.shard.IndexShard.acquirePrimaryOperationPermit(IndexShard.java:2352) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.support.replication.TransportReplicationAction.acquirePrimaryOperationPermit(TransportReplicationAction.java:988) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.support.replication.TransportReplicationAction$AsyncPrimaryAction.doRun(TransportReplicationAction.java:370) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.support.replication.TransportReplicationAction$PrimaryOperationTransportHandler.messageReceived(TransportReplicationAction.java:325) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.action.support.replication.TransportReplicationAction$PrimaryOperationTransportHandler.messageReceived(TransportReplicationAction.java:312) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.transport.RequestHandlerRegistry.processMessageReceived(RequestHandlerRegistry.java:66) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.transport.TransportService$7.doRun(TransportService.java:687) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:759) [elasticsearch-6.6.0.jar:6.6.0]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-6.6.0.jar:6.6.0]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_181]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_181]

- --[[kgh]] (discussion) 10 February 2019 at 12:22 (CET)

- Interesting, and for the subject "Project" (see {"title":"Project","subobject":"","namespace":0,"interwiki": ...) the check will let you know.

- I manually purged the page and it synced with the ES backend without any issue, which means that ES at that time couldn't respond within "...update within [30s]" and threw an exception when the time was up.

- As for "org.elasticsearch.ElasticsearchTimeoutException: Failed to acknowledge mapping update within", https://discuss.elastic.co/t/an-error-while-bulk-indexing/69404 and https://github.com/elastic/elasticsearch/issues/37263 have some hints.

- " ... is spamming the Elasticsearch error log ": there are probably some ES settings to reduce the amount of noise. Not sure about `'throw.exception.on.illegal.argument.error'` in DefaultSettings.php, which only applies to the indexing. What happens when you turn this off (i.e. set it to `false`)?

- Just changed the setting. Let's see tomorrow what happens. --[[kgh]] (discussion) 9 February 2019 at 18:14 (CET)

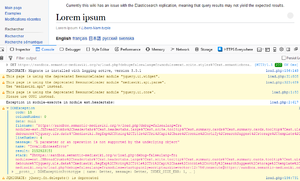

Headertabs extension?

Unless there is a compelling reason to have Headertabs enabled, I would rather see it go, because it causes issues for other JS when it triggers errors like the one shown above, especially when the resource isn't needed (or used) on a specific page.